Boost your expertise on dbt, the essential open-source framework for transforming your data into a true growth engine for your organization.

In modern data environments, the ability to transform data reliably, collaboratively, and in a governed manner is a central challenge. dbt (Data Build Tool) has emerged as a key tool to address these challenges, particularly in cloud-native and modern data stack contexts.

What is dbt?

dbt is an open-source framework designed to enable data teams to define, test, and document data transformations directly within data warehouses or lakehouses. It is built on a key principle: data transformation should be managed like code, with versioning, collaboration, and automation within a CI/CD pipeline.

Rather than executing traditional ETL pipelines, dbt focuses exclusively on the T (transformation) part of ELT, leveraging the computing power of data warehouses directly.

How does dbt execute?

The execution of dbt relies on an engine that:

- Reads the SQL models defined by data engineers.

- Resolves dependencies between models.

- Generates the final SQL queries.

- Executes the transformations directly in the target data warehouse or lakehouse.
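
The four steps above can be sketched in a few lines of Python. This is a toy illustration, not dbt's actual implementation: the model names (`stg_orders`, `orders_by_customer`), the regex-based parsing of `ref()` calls, and the in-memory SQLite database standing in for the warehouse are all assumptions made for the example.

```python
import re
import sqlite3
from graphlib import TopologicalSorter

# Hypothetical models: name -> SQL using dbt-style {{ ref('...') }} calls.
MODELS = {
    "orders_by_customer": (
        "select customer_id, sum(amount) as total "
        "from {{ ref('stg_orders') }} group by customer_id"
    ),
    "stg_orders": "select id, customer_id, amount from raw_orders where amount > 0",
}

REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*'([^']+)'\s*\)\s*\}\}")


def dependencies(sql: str) -> set[str]:
    """Step 1: read a model and collect the models it references."""
    return set(REF_PATTERN.findall(sql))


def run_order(models: dict[str, str]) -> list[str]:
    """Step 2: resolve dependencies into a valid execution order."""
    graph = {name: dependencies(sql) for name, sql in models.items()}
    return list(TopologicalSorter(graph).static_order())


def compile_sql(sql: str) -> str:
    """Step 3: replace each ref() call with the referenced relation name."""
    return REF_PATTERN.sub(lambda m: m.group(1), sql)


def run(models: dict[str, str], conn: sqlite3.Connection) -> list[str]:
    """Step 4: execute each compiled model in the target database, as a view."""
    order = run_order(models)
    for name in order:
        conn.execute(f"create view {name} as {compile_sql(models[name])}")
    return order


conn = sqlite3.connect(":memory:")  # stand-in for the warehouse
conn.execute("create table raw_orders (id integer, customer_id integer, amount real)")
conn.executemany(
    "insert into raw_orders values (?, ?, ?)",
    [(1, 10, 50.0), (2, 10, 25.0), (3, 20, 40.0), (4, 20, -5.0)],
)
order = run(MODELS, conn)
totals = conn.execute(
    "select customer_id, total from orders_by_customer order by customer_id"
).fetchall()
```

Even though `orders_by_customer` is declared first, it is built after `stg_orders`, because the order comes from the references and not from the order of declaration.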

Execution modes

- Locally, from the developer's environment (dbt CLI).

- Via dbt Cloud, a SaaS platform that orchestrates and schedules executions.

- Integrated into CI/CD pipelines (GitHub Actions, GitLab CI, Azure DevOps, etc.).
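
In a CI/CD job, dbt is typically invoked as a command-line step whose exit code decides whether the pipeline passes. A minimal sketch of such a wrapper, assuming dbt is installed on the runner and that a target named `ci` (a hypothetical name) is defined in the project's connection profile:

```python
import shutil
import subprocess


def dbt_build_command(target: str) -> list[str]:
    """Build the argv for `dbt build`, which runs models and their tests."""
    return ["dbt", "build", "--target", target]


def run_in_ci(target: str = "ci") -> int:
    """Run dbt and return its exit code; non-zero should fail the pipeline."""
    if shutil.which("dbt") is None:
        raise RuntimeError("dbt executable not found on PATH")
    return subprocess.run(dbt_build_command(target), check=False).returncode
```

The same command works unchanged from a developer's terminal, which is what keeps local and CI runs consistent.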

Orchestration

dbt does not handle full workflow orchestration (ingestion, ML, etc.), but it integrates with orchestrators like Airflow, Kestra, or Dagster, allowing it to fit into broader pipelines.

The strengths of dbt

1. SQL-centric

The models are defined in SQL, a language widely used by data engineers and analysts. dbt introduces a modular approach, where each model can be tested, documented, and versioned.

2. Dependency management

Models can reference each other using the `ref()` function, from which dbt automatically builds a DAG (Directed Acyclic Graph) of transformations. This enables a clear visualization of the entire data processing pipeline.
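
Because the graph must be acyclic, two models that reference each other cannot be ordered and have to be rejected. A minimal sketch of that check using Python's standard `graphlib` module; the model names are hypothetical, and this illustrates the idea rather than dbt's own error handling:

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical dependency maps: model -> models it references.
VALID = {"stg_orders": set(), "orders_by_customer": {"stg_orders"}}
CIRCULAR = {"model_a": {"model_b"}, "model_b": {"model_a"}}


def is_valid_dag(graph: dict[str, set[str]]) -> bool:
    """Return True if the models can be ordered, False if a cycle exists."""
    try:
        TopologicalSorter(graph).prepare()
    except CycleError:
        return False
    return True
```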

3. Automated testing & documentation

- dbt enables automated data tests (uniqueness, non-null constraints, referential integrity).

- It automatically generates comprehensive documentation, including dependency diagrams.

- The documentation is served through a user-friendly web interface, benefiting both data engineers and business teams.
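
The idea behind these tests is that each check is a query returning the offending rows, so an empty result means the test passes. The sketch below mimics the three checks named above (dbt's built-in tests for them are called `unique`, `not_null`, and `relationships`). The tables, the sample data, and the exact SQL are assumptions for illustration, with in-memory SQLite standing in for the warehouse:

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the warehouse
conn.executescript(
    """
    create table customers (id integer);
    create table orders (id integer, customer_id integer);
    insert into customers values (1), (2);
    insert into orders values (100, 1), (101, 2), (101, 2), (102, null), (103, 9);
    """
)

# Each check is a query that returns the offending rows; zero rows means "pass".
CHECKS = {
    "unique(orders.id)": "select id from orders group by id having count(*) > 1",
    "not_null(orders.customer_id)": "select id from orders where customer_id is null",
    "relationships(orders.customer_id -> customers.id)": (
        "select o.id from orders o "
        "left join customers c on o.customer_id = c.id "
        "where o.customer_id is not null and c.id is null"
    ),
}

failures = {name: conn.execute(sql).fetchall() for name, sql in CHECKS.items()}
```

With this sample data each check flags exactly one order: 101 is duplicated, 102 has no customer, and 103 points to a customer that does not exist.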

4. Collaboration and CI/CD

- Each SQL model is versioned in a Git repository.

- Merge request workflows include automated validation (testing, SQL linting, documentation generation).

- dbt promotes best practices in DataOps.

5. Multi-cloud and multi-technology

dbt natively supports multiple data warehouses and lakehouses, making it highly flexible:

| Technology | Supported? |
|---|---|
| BigQuery | ✅ |
| Snowflake | ✅ |
| Redshift | ✅ |
| Databricks | ✅ |
| Azure Synapse | ✅ (via plugins) |
| PostgreSQL | ✅ |
| DuckDB | ✅ |
| Trino | ✅ |
| ClickHouse | ✅ (via plugins) |

Weaknesses & limitations

- Exclusive focus on transformation – dbt does not handle data ingestion or global orchestration. It only operates after data is available in the data warehouse, requiring integration with tools like NiFi, Airbyte, or Fivetran for broader data pipelines.

- SQL-first approach – dbt is built around SQL, but some complex transformations (e.g., machine learning, advanced data processing) require richer languages like Python or Scala. Support for these is limited: dbt Python models are available only on certain platforms (for example Databricks, Snowflake, and BigQuery).

- Managing complexity – In large organizations, the proliferation of models and cross-references can lead to high complexity (cascading dependencies, performance degradation). This demands strict governance.

- Query cost – Since all transformations run directly in the warehouse, poorly optimized models can significantly increase costs, especially on platforms like Snowflake or BigQuery.
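
To make the cost point concrete, here is a back-of-the-envelope estimate for a warehouse billed by bytes scanned (as with BigQuery's on-demand pricing; platforms billed by compute time, such as Snowflake, need a different formula). All figures, including the price per TiB, are hypothetical:

```python
def monthly_scan_cost(bytes_per_run: int, runs_per_day: int,
                      price_per_tib: float, days: int = 30) -> float:
    """Estimate the monthly cost of one model billed by bytes scanned."""
    tib_per_run = bytes_per_run / 2**40
    return tib_per_run * runs_per_day * days * price_per_tib


# Hypothetical model that rescans a 2 TiB table every hour at 5.0 per TiB.
full_scan = monthly_scan_cost(2 * 2**40, runs_per_day=24, price_per_tib=5.0)

# Same schedule, but reading only the newest 32 GiB (2**35 bytes) per run.
pruned = monthly_scan_cost(2**35, runs_per_day=24, price_per_tib=5.0)
```

Under these assumptions the full rescan costs 7,200 per month against 112.50 for the pruned version, which is why model design and scheduling deserve review before a model goes to production.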

Strategic benefits for CIOs & CDOs

1. Business & technical alignment

dbt promotes a collaborative model where business teams (analysts) and data engineering teams share the same tools and SQL models. This creates direct traceability between raw data sources and the KPIs displayed in dashboards, ensuring transparency and consistency across the organization.

2. Documentation and governance

- Centralized documentation

- Complete traceability

- Easier auditing and compliance

3. Agility and time-to-value

dbt accelerates the deployment of new indicators thanks to its modular approach and strong integration with existing DevOps workflows.

Use case example: financial reporting in a mid-sized enterprise

In a multi-subsidiary company, dbt could be used to:

- Declare as sources the raw accounting data already loaded into BigQuery by an ingestion tool.

- Define an initial layer of dbt models to standardize accounting plans.

- Add transformation models to aggregate expenses and revenues by cost center.

- Automatically test the consistency of these aggregations.

- Generate complete documentation, accessible to financial controllers.

- Publish the final tables, directly consumable by Power BI or Looker.

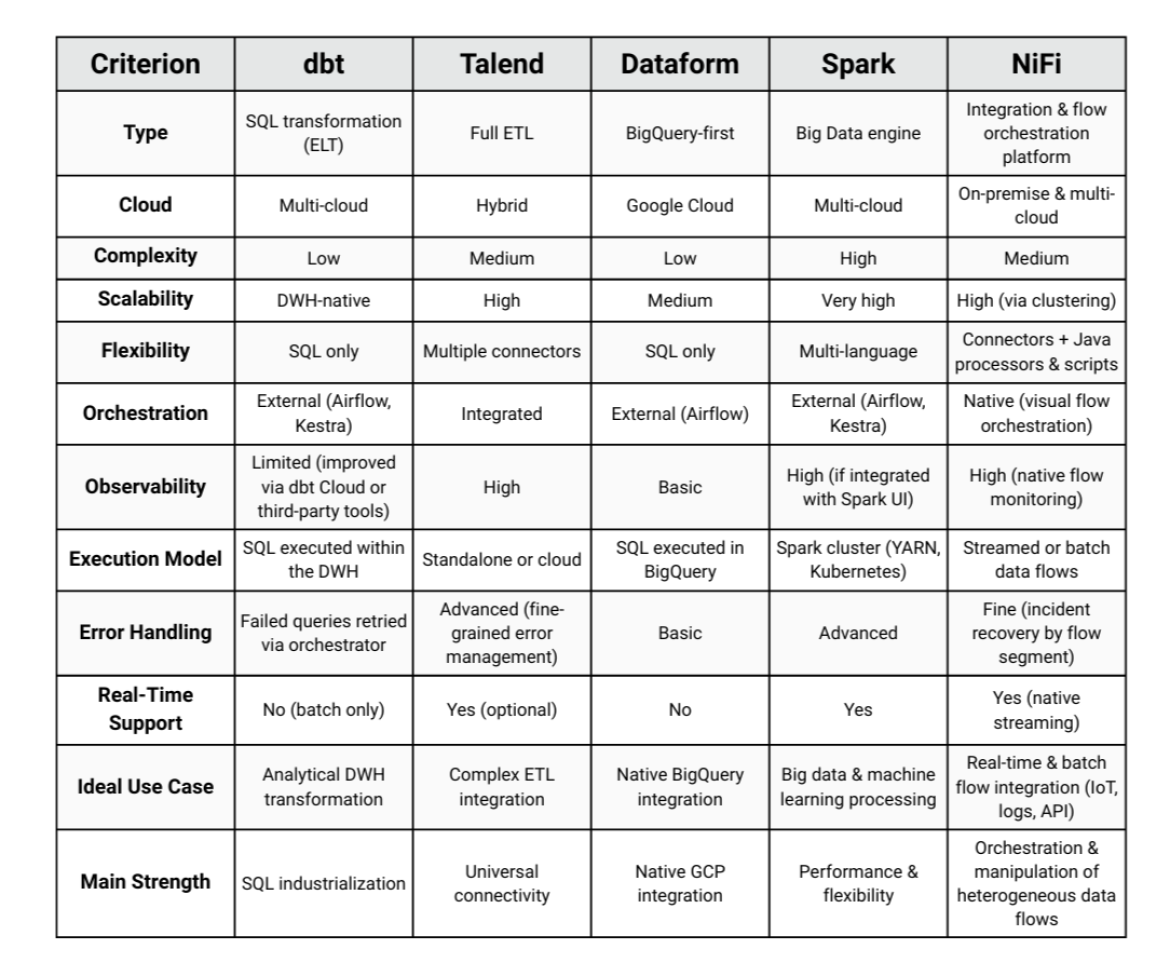
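
The layering and the consistency test in this use case can be sketched as follows. Everything here is illustrative: the account codes, the mapping table, and the view names (`stg_entries`, `fct_cost_center`) are hypothetical, and in-memory SQLite stands in for BigQuery.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for BigQuery
conn.executescript(
    """
    -- Raw entries from two subsidiaries using different local account codes.
    create table raw_entries (subsidiary text, account text, cost_center text, amount real);
    insert into raw_entries values
        ('FR', '6061', 'IT', 1200.0), ('FR', '7010', 'SALES', 5000.0),
        ('DE', '4930', 'IT', 800.0),  ('DE', '8400', 'SALES', 3000.0);

    -- Hypothetical mapping from local accounts to a group chart of accounts.
    create table account_mapping (subsidiary text, account text, group_account text);
    insert into account_mapping values
        ('FR', '6061', 'EXPENSE'), ('FR', '7010', 'REVENUE'),
        ('DE', '4930', 'EXPENSE'), ('DE', '8400', 'REVENUE');

    -- Layer 1: standardize the accounting plans.
    create view stg_entries as
    select r.subsidiary, m.group_account, r.cost_center, r.amount
    from raw_entries r
    join account_mapping m using (subsidiary, account);

    -- Layer 2: aggregate expenses and revenues by cost center.
    create view fct_cost_center as
    select cost_center, group_account, sum(amount) as total
    from stg_entries
    group by cost_center, group_account;
    """
)

# Consistency test: returns a row only if the aggregate drifts from the raw total.
RECONCILIATION = """
select raw_total, agg_total
from (select sum(amount) as raw_total from raw_entries) as r,
     (select sum(total) as agg_total from fct_cost_center) as a
where abs(raw_total - agg_total) > 0.005
"""

mismatches = conn.execute(RECONCILIATION).fetchall()
report = conn.execute(
    "select cost_center, group_account, total from fct_cost_center "
    "order by cost_center, group_account"
).fetchall()
```

If a subsidiary later posts to an account missing from the mapping, the join silently drops the entry. The reconciliation query then returns a row, which is the kind of regression an automated test is meant to catch before the figures reach a dashboard.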

Interesting comparisons

dbt is often compared with orchestrators and ETL tools, but these tools are not directly equivalent: they serve different purposes within the data transformation ecosystem.

Why?

dbt is focused on SQL transformation and managing the "T" in ELT. Comparing dbt to a full orchestration solution (like Airflow, Dagster, or Kestra) or an ETL tool (like Talend or NiFi) isn't always meaningful. However, some key criteria can still be compared across these solutions.

There are few strict performance benchmarks comparing dbt to other tools because:

- dbt executes SQL directly within the data warehouse.

- Performance depends on the underlying engine (BigQuery, Snowflake, etc.).

However, some tests indicate that:

- dbt performs as well as manual SQL queries, since it does not introduce any intermediate processing layers.

- For large volumes (1+ TB), dbt is constrained by the data warehouse’s capacity. If the warehouse is poorly configured, dbt cannot compensate, whereas an engine like Spark lets engineers tune partitioning and parallelism directly.

If your target architecture is a Modern Data Stack (Snowflake, BigQuery, Redshift), dbt is almost essential due to its simplicity, compatibility with DataOps processes, and alignment with software engineering practices.

However, for complex multi-source environments, real-time needs, or advanced Big Data cases, it is often necessary to combine it with orchestrators (Airflow, Kestra), ingestion tools (Talend, NiFi), and massively parallel analytical engines (Spark).

Conclusion

Contact us today and transform your data projects to accelerate your digital transformation, or download our latest white paper, "The Ultimate Checklist for Building an Open-Source AI Application."